Humanoid Robotics AI Engineer | Real World Deployments | Industrial Systems

IEEE Author

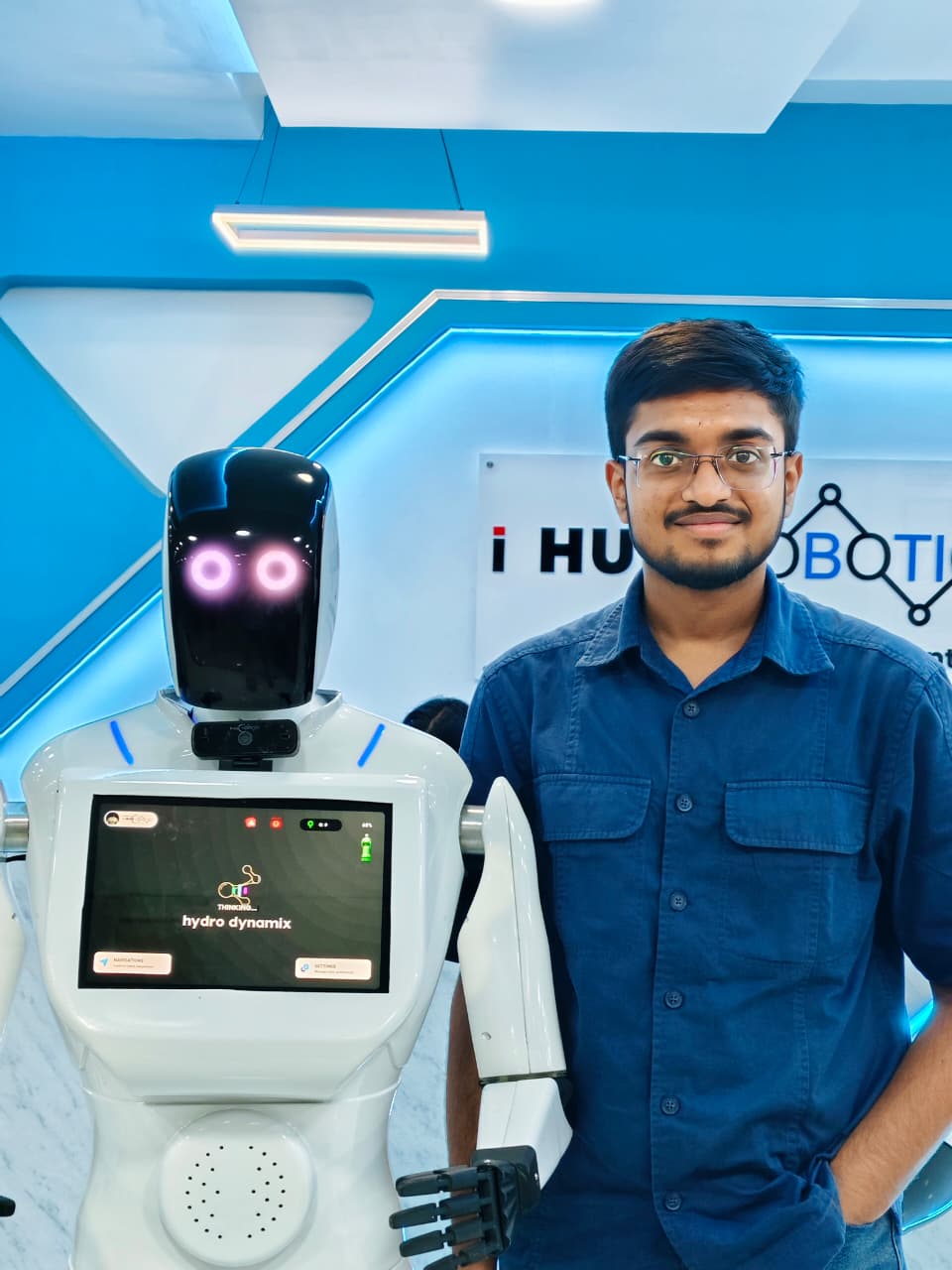

Namaste, I'm

Vatsalya Bhadaurya

I work on real-world humanoid robotics systems, building perception, behavior, and embodied AI pipelines deployed on physical robots using ROS 2, Jetson, and VLM/VLA-based reasoning. My focus is humanoid perception and behavior for real robots and real deployments, not simulations.

About Me

My journey in technology began in grade 8 and gradually evolved into a focused interest in applied robotics and AI system design. I work primarily on humanoid robotics, building perception, behavior, and embodied AI systems that run on real robots rather than in simulation.

My experience includes ROS 2-based architectures, on-device machine learning deployment on Jetson platforms, and multimodal perception systems designed to operate under real-world constraints. I have also worked on industrial vision systems for automotive manufacturing, where robustness and reliability were critical.

Alongside industry work, I am an IEEE-published researcher focused on multimodal sensing and real-time machine learning systems. I am also building MaaKosh, a maternal and neonatal health initiative centered on practical medical devices.

My long-term goal is to design intelligent robotic systems that work reliably in real environments by combining research depth with strong engineering discipline.

Experience

- Contributing to the development of AI systems for semi-humanoid robots under the R&D division.

- Collaborating with the core team to integrate AI modules into the robot's ROS framework.

- Participating in real-world and simulated testing and iterating based on feedback.

- Training conversational models for natural interaction, and designing adaptive behavior logic tailored to real-world domains like classrooms and hospitals.

- Leading development of the MyGBU Android app and other tech solutions.

- Developing the college app (MyGBU) to streamline student services

- Leading technical development teams and initiatives

- Implementing innovative solutions for campus needs

- Developing the hostel app and other tech solutions under the DSA department.

- Building the GBU Hostels app for college administration

- Creating data-driven dashboards for administration

- Developing automation tools for improved efficiency

- Featured speaker and community contributor for the Arduino platform.

- Delivered talks on AI & IoT innovation

- Featured and interviewed by Arduino for outstanding projects

- Conducted workshops on electronics & 3D printing

🏭 Industrial Deployment – Automotive Manufacturing

Humanoid Alloy Defect & Paint Anomaly Detection System

Developed and deployed an industrial humanoid vision system for alloy defect and paint anomaly detection in automotive manufacturing environments. This work required building perception pipelines robust to harsh lighting, noise, motion, and clutter, conditions that go beyond controlled datasets and simulations.

Key Technical Challenges:

- Robustness under factory noise and harsh lighting conditions

- Real-time processing with motion blur compensation

- Deployment in real automotive manufacturing environments

- Integration as part of a humanoid perception pipeline

📚 Research & Publications

Development of a Real-Time Multi-Modal Sensor for Field-Based Microplastic Detection

Proposed a real-time multimodal sensing system combining optical sensing, impedance analysis, and machine learning for field-deployable microplastic detection.

Novel Contributions:

- Multimodal sensor fusion combining optical sensing and impedance analysis

- Machine learning integration for real-time detection and classification

- Field-deployable system designed for practical environmental monitoring

- Real-time processing capabilities for on-site microplastic detection